Peter Zhizhin

Machine Learning Engineer

Driven Machine Learning Engineer with a strong foundation in Applied Mathematics and Informatics, specializing in bringing NeRF research to production, LLM assistants, and speech recognition. Proven ability to bridge research and production, delivering measurable performance improvements and contributing to cutting-edge projects. Passionate about scalable ML, autonomous agents, and collaborative problem-solving.

Experience

Machine Learning Engineer, NeRF Production & LLM Assistants

December 2022 – Present

- Visual model responses for Project Astra, a research multimodal LLM (demo).

- Evaluation pipeline for Project Astra; system performance improved from 12.5% to 50%.

- Brought NeRF research into production for reconstructing indoor spaces (research blog).

- Migration from mip-NeRF 360 to Zip-NeRF, saving 25x in SWE hours.

- SMERF paper, 3rd author, SIGGRAPH Honorable Mention Award.

Yandex

Machine Learning Engineer, Alice Speech Recognition

September 2020 – December 2022

- Created a pipeline for text recording on Yandex Toloka, fixing 50% of recognition bugs for long-tail rare words.

- Introduced an attention-based encoder-decoder (AED) Transformer model for speech recognition in Yandex TV, achieving a 2x Word Error Rate improvement.

- Trained a CTC Transformer model for partial speech recognition: 100 ms lower Q90 endpointer latency and 2x RPS; presented at DataFest 2021.

- Implemented end-to-end shallow-fusion contextual biasing for contact book recognition with an OpenFst contextual LM, yielding a 16% relative Word Error Rate improvement on calls.

Software Engineering Intern, People Ranking

August 2019 – October 2019

- Response-level caching system that reduced latency for high-profile G Suite users from 15 s to 50 ms.

- Migrated deprecated data sources and generated Word2Vec representations.

Education

HSE University

BSc (Hons) in Applied Mathematics and Informatics

September 2015 – July 2020

Specialization in Machine Learning and Applications

GPA: 8.93/10

Projects & Research Contributions

llm.c Contributions

August 2024

- Implemented multi-GPU training using the NCCL library and asynchronous cross-GPU gradient accumulation.

- Documented the process in a YouTube video.

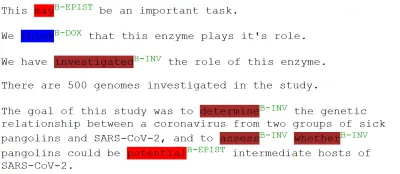

Lifelong Learning for NLP Tasks

November 2019 – June 2020

Explored Transformer-based models for detecting uncertainty hedges in text, using statistical tests to compare model performance.

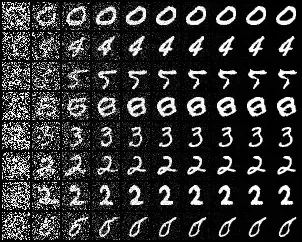

Image Generation via Data Gradient Estimation

November 2019 – December 2019

Implemented sliced and denoising score matching following recent literature, reproducing the reported results.

Obstacle Tower RL Challenge

February 2019 – April 2019

- Implemented Rainbow DQN and Proximal Policy Optimization (PPO) algorithms.

- Won Round 1 and earned $1,000 in Google Cloud credits.

- Contributed open-source improvements to the TensorFlow-Agents library.

Technical Skills

Languages:

Python, C++, Rust (experience)

Machine Learning:

PyTorch, JAX, Fairseq

Distributed & Concurrent:

NCCL, Async Programming, Docker, Kubernetes

Others:

OpenFst, Differential Privacy, Git, Linux, CI/CD

Additional Information

Research Interests:

- Reinforcement Learning

- Scalable ML Infrastructure

- Safe and Interpretable AI

Collaboration:

Passion for pair programming, cross-disciplinary teamwork, and clear, results-driven communication.